Raspberry Pi Clustering – Part 1

Note: The Pi3 figures in this post may be questionable, since I have since discovered that the Pi3 was overheating and throttling its clock speed. A future post will address this. Since I'm interested in real-world performance, though, I'm not going to discount these results completely: overheating is a factor to consider!

The other day I came up with a slightly daft idea: deploy the website I'm currently developing to a cluster of Raspberry Pis rather than my usual Linode setup. Seems like a crazy idea? Yeah, probably! But I still want to try it!

Why?

My Linode setup is getting a bit complicated. Right now I have various mostly unmaintained sites running in a selection of Docker containers, but this has its own problems, notably running out of disk space on the fairly small root partition. The whole setup really needs rebuilding, and I'm tempted to just deploy it all with Ansible directly to the host. What does this have to do with Pi clustering? Well, a Pi cluster is a nice way of trying this out!

There Is Some History Here!

The Raspberry Pi website has itself been hosted on Pis a couple of times; most recently, it was announced that the Pi3 launch was partly hosted on Pi2s!

It's also possible to get hosting on a Pi3, but part of the fun of this idea is clustering! (I will still run some tests to see how the cluster compares to a single Pi3.)

The original Pi was said not to be a good choice because its performance per cost wasn't good enough. That said, the Zeros I'm using here aren't actually too far off!

The Setup: My Initial Idea

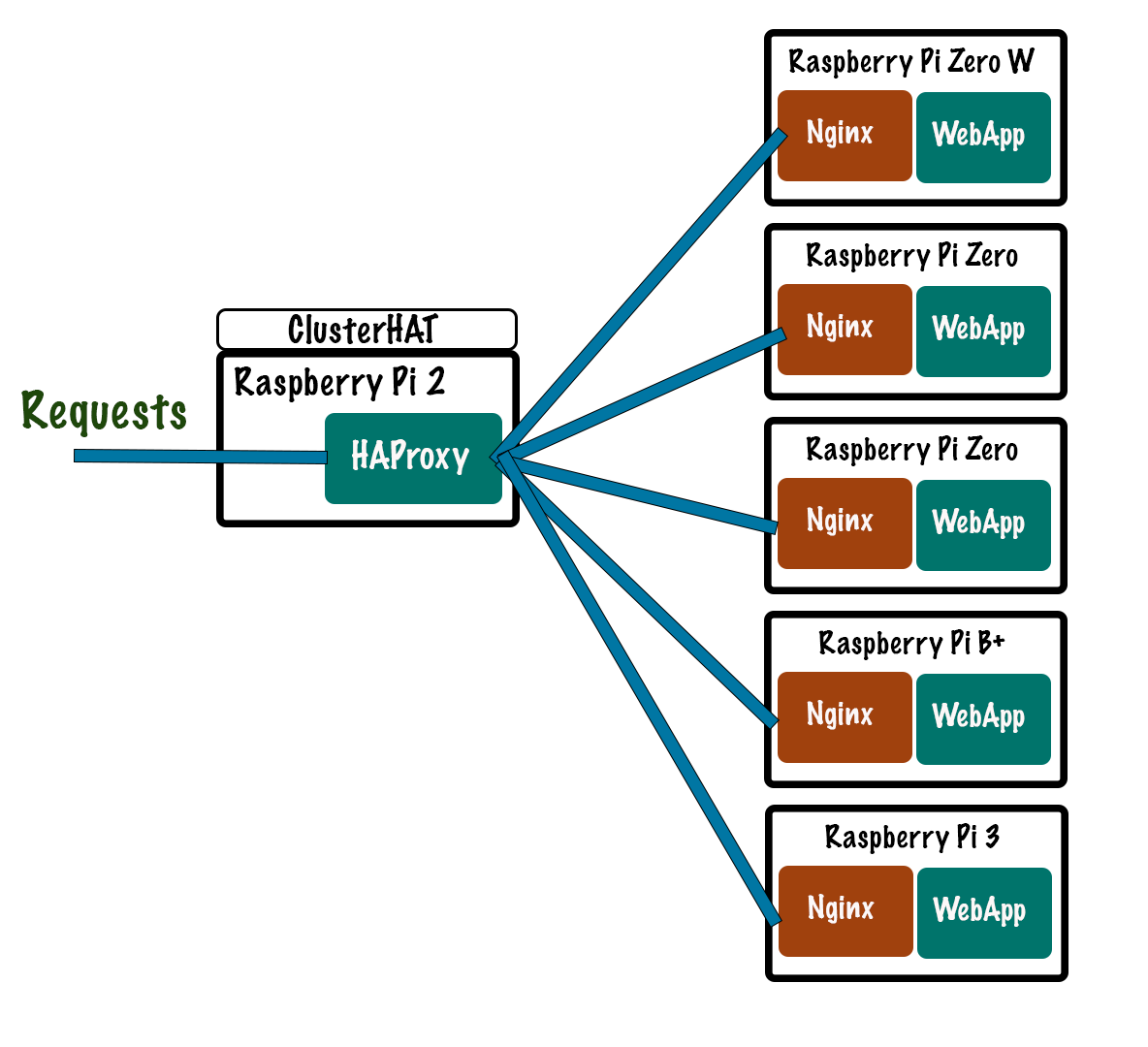

My first attempt was to deploy onto a ClusterHat (https://clusterhat.com/) with some zeros, an old model B+ (512Mb ram version) and a Pi3 for good measure and comparisons. The setup was something like this:

My initial setup

What do the parts do?

| Component | Purpose |
|---|---|
| HAProxy | Load balancer. Distributes the load among the other servers. Nginx can also do this, but I chose HAProxy because it supports easily disabling nodes for maintenance (see this Ansible example). |
| Nginx | Web cache. Reduces load on the web app by serving pregenerated responses where possible. |
| WebApp | The Spark Java (not Apache Spark!) based app I want to deploy. Maybe not the best choice, but I chose it before deciding to use Pis! |
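For illustration, this is roughly what disabling a node involves at the HAProxy level. HAProxy's runtime API accepts `disable server <backend>/<server>` and `enable server <backend>/<server>` over its stats socket, provided the socket is configured with `level admin`. The following is a minimal Python sketch, not the Ansible approach referenced in the table. The socket path and the backend/server names (`pis`, `zero1`) are hypothetical.

```python
import socket

# Hypothetical path: must match a `stats socket <path> level admin` line in haproxy.cfg.
SOCKET_PATH = "/run/haproxy/admin.sock"

VALID_ACTIONS = {"enable", "disable"}


def build_command(action: str, backend: str, server: str) -> str:
    """Build a runtime API command such as 'disable server pis/zero1'."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    return f"{action} server {backend}/{server}\n"


def send_command(command: str, path: str = SOCKET_PATH) -> str:
    """Send one command over HAProxy's Unix stats socket and return the reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(path)
        sock.sendall(command.encode("ascii"))
        chunks = []
        # Outside interactive "prompt" mode, HAProxy closes the connection
        # after answering, so read until EOF.
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

Before maintenance you would call `send_command(build_command("disable", "pis", "zero1"))`, and afterwards you would send the matching `enable`. While the server is disabled, HAProxy stops routing new requests to that node.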

The thinking behind this setup was that the webapp would be slow (it is!), but the Nginx server on each node could act as a cache, and HAProxy would then load balance between these caches. When stress testing with JMeter, though, it became clear that HAProxy on the head node was actually the bottleneck. Still, let's see what this is capable of!
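HAProxy's `roundrobin` balancing respects per-server weights, which is how a faster node can be given a bigger share of the traffic. To illustrate weighted balancing in general, here is smooth weighted round-robin, a well-known variant that nginx's upstream module also uses. HAProxy's internal implementation differs, and the node names and weights below are hypothetical.

```python
from typing import Dict, List


class SmoothWeightedRoundRobin:
    """Pick backends in proportion to their weights, interleaving the picks
    instead of sending a burst of requests to the heaviest backend."""

    def __init__(self, weights: Dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every backend gains its weight; the largest running total wins
        # and is then pushed back down by the sum of all weights.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


# Hypothetical nodes: the Pi3 is weighted three times as heavily as each Zero.
balancer = SmoothWeightedRoundRobin({"zero1": 1, "zero2": 1, "pi3": 3})
picks: List[str] = [balancer.pick() for _ in range(10)]
```

Over each cycle of five picks, the Pi3 receives three requests and each Zero receives one. The Pi3's picks are spread out, so no node is hit several times in a row.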

I used ApacheBench (ab) to get an idea of its performance:

```
$ ab -kc 100 -n 10000 http://piclusternode0/
This is ApacheBench, Version 2.3 <$Revision: 1796539 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking piclusternode0 (be patient)
Completed 1000 requests
Completed 2000 requests
Completed 3000 requests
Completed 4000 requests
Completed 5000 requests
Completed 6000 requests
Completed 7000 requests
Completed 8000 requests
Completed 9000 requests
Completed 10000 requests
Finished 10000 requests

Server Software:        nginx/1.10.3
Server Hostname:        piclusternode0
Server Port:            80

Document Path:          /
Document Length:        556 bytes

Concurrency Level:      100
Time taken for tests:   11.841 seconds
Complete requests:      10000
Failed requests:        0
Keep-Alive requests:    0
Total transferred:      9144496 bytes
HTML transferred:       5560000 bytes
Requests per second:    844.50 [#/sec] (mean)
Time per request:       118.413 [ms] (mean)
Time per request:       1.184 [ms] (mean, across all concurrent requests)
Transfer rate:          754.16 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        2   43 203.7     21    3165
Processing:     6   75  49.2     67     988
Waiting:        6   75  48.6     66     983
Total:         12  118 207.8     88    3215

Percentage of the requests served within a certain time (ms)
  50%     88
  66%     91
  75%     93
  80%     94
  90%    108
  95%    173
  98%    433
  99%   1152
 100%   3215 (longest request)
```
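ab's headline figures are all derived from a few raw numbers, which makes it easier to compare runs. The sketch below recomputes them from the output above. The "mean" time per request is simply concurrency divided by throughput (Little's law with 100 requests always in flight). The small differences in the last digit are presumably because ab works from the unrounded test duration.

```python
# Raw figures copied from the ab run above.
concurrency = 100
complete_requests = 10_000
time_taken_s = 11.841
total_transferred_bytes = 9_144_496

# Throughput over the whole run.
requests_per_second = complete_requests / time_taken_s
# Mean latency seen by each of the 100 concurrent clients.
time_per_request_ms = concurrency * time_taken_s * 1000 / complete_requests
# Mean time "across all concurrent requests", i.e. 1 / throughput.
time_per_request_all_ms = time_taken_s * 1000 / complete_requests
# ab reports kilobytes as 1024 bytes.
transfer_rate_kb_s = total_transferred_bytes / 1024 / time_taken_s

print(f"{requests_per_second:.2f} req/s, {time_per_request_ms:.3f} ms per request")
```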

Thoughts On First Results

That's not actually bad for a cluster of low-power machines. Running the test a couple of times and watching the CPU load on the load balancer suggests that the bottleneck here may again be the HAProxy node.

It turns out that HAProxy is mostly single-threaded. It is possible to make it run as multiple processes, but then you lose some of the ability to control it and get metrics out of it. I adjusted the config to run four processes (the Pi2 has four CPU cores), but the results were quite similar. So I guess it was just at its single-core limit, or perhaps network bandwidth was becoming a problem.
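The reasoning here is the classic pipeline bottleneck. Every request passes through the single balancer and is then handled by one backend. Overall throughput therefore can't exceed the smaller of two numbers: the balancer's capacity and the combined capacity of the backends. Below is a minimal sketch. The capacities are made-up numbers for illustration, not measurements from this cluster.

```python
from typing import Dict


def max_throughput(balancer_rps: float, backend_rps: Dict[str, float]) -> float:
    """Upper bound on requests/sec for one balancer in front of parallel backends."""
    # Backends serve requests in parallel, so their capacities add up...
    combined_backends = sum(backend_rps.values())
    # ...but every request must also pass through the single balancer.
    return min(balancer_rps, combined_backends)


# Hypothetical capacities in requests/sec (illustrative only).
backends = {"zero1": 400.0, "zero2": 400.0, "zero3": 400.0, "zero4": 400.0}
balancer_bound = max_throughput(900.0, backends)

# Adding a much faster backend does not help a balancer-bound system.
with_pi3 = max_throughput(900.0, {**backends, "pi3": 1500.0})

# With a faster balancer, the backends become the limit instead.
faster_balancer = max_throughput(5000.0, backends)
```

While the balancer is the limit, adding nodes or reweighting them leaves the ceiling unchanged. Those changes only pay off once the balancer has headroom.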

This isn't a very realistic scenario, though, for three reasons:

- Not all hits will be to the homepage; some will require loading data via the webapp and so will not be cacheable

- I intend to use TLS (aka HTTPS) for this site eventually, which will significantly increase load.

- The site I want to host on this will involve some POST requests, requiring database writes. This means the load will be a mix of IO and CPU-bound requests.

How Does This Compare To A Single Pi?

It's worth stopping to consider how a single Pi performs. Here are some numbers (all runs used 10000 requests, 100 at a time). Note that these kept changing as I tested, so they're not especially accurate.

| Metric | Cluster | Single Pi Zero | Single Pi 3 |
|---|---|---|---|
| Mean Time Per Request (ms) | 118.413 | 225.442 | 62.771 |
| 95th Percentile Time (ms) | 173 | 280 | 62 |
| Requests Per Second (mean) | 844.50 | 443.57 | 1593.10 |

So a single Pi3 does a better job than the whole cluster. That's not all that surprising, given that it's more powerful than the average node. I tried increasing its weight in the cluster, but I think the balancer node is hitting its IO bandwidth limit.

Perhaps a better test would be to hit an endpoint that does not benefit from caching. This is going to stress the CPU far more.

| Metric | Cluster | Cluster (No Nginx) | Single Pi Zero | Single Pi Zero (No Nginx) | Single Pi 3 | Single Pi 3 (No Nginx) |
|---|---|---|---|---|---|---|
| Mean Time Per Request (ms) | 1058.234 | 430.534 | 6354.980 | 6588.006 | 1877.685 | 236.815 |
| 95th Percentile Time (ms) | 5476 | 1187 | 7060 | 7384 | 5053 | 655 |
| Requests Per Second (mean) | 94.50 | 232.27 | 15.74 | 15.18 | 53.26 | 422.27 |

It's worth noting that the Pi3 wasn't actually very heavily loaded (about 50% per core). It seems the Nginx proxy (which in this case was just passing data through) might be the limit, so I ran the same test going directly to the Java webapp. I suspect the config I wrote to optimise caching, which holds back concurrent requests until a single origin request has completed, is limiting performance more than I expected! On the Zero this makes no difference, though, because it's single-core.
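The caching behaviour described above is often called request coalescing or "single flight": one request goes to the origin while identical requests wait for its result. In nginx, the `proxy_cache_lock` directive provides this for cache misses. Below is a minimal Python sketch of the general idea, not nginx's implementation. The `/expensive` key is hypothetical, and `time.sleep` stands in for the slow webapp.

```python
import threading
import time
from typing import Any, Callable, Dict, List, Optional


class _Call:
    """One in-flight origin request and its eventual outcome."""

    def __init__(self) -> None:
        self.done = threading.Event()
        self.value: Any = None
        self.error: Optional[Exception] = None


class SingleFlight:
    """Collapse concurrent requests for the same key into one origin call."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._calls: Dict[str, _Call] = {}

    def do(self, key: str, fetch: Callable[[], Any]) -> Any:
        with self._lock:
            call = self._calls.get(key)
            leader = call is None
            if leader:
                call = _Call()
                self._calls[key] = call
        if leader:
            try:
                call.value = fetch()  # only the leader hits the origin
            except Exception as exc:
                call.error = exc
            finally:
                with self._lock:
                    del self._calls[key]
                call.done.set()
        else:
            call.done.wait()  # followers block until the leader finishes
        if call.error is not None:
            raise call.error
        return call.value


# Usage: five simultaneous requests for the same (hypothetical) uncached page.
origin_calls = 0
start_together = threading.Barrier(5)
flight = SingleFlight()
responses: List[str] = []


def slow_origin() -> str:
    global origin_calls
    origin_calls += 1
    time.sleep(0.5)  # stand-in for the slow Java webapp
    return "<html>page</html>"


def client() -> None:
    start_together.wait()
    responses.append(flight.do("/expensive", slow_origin))


threads = [threading.Thread(target=client) for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Only one origin request runs per key, and the other four threads sit idle until it returns. That is a clear win for a page that then gets cached. However, identical requests can no longer be worked on in parallel, which fits the suspicion that this kind of locking leaves a multi-core Pi3 underused.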

Summary

It seems clear that you'd need a very large number of Zeros to beat a Pi3, but I kinda knew that anyway. It does seem, though, that for CPU-bound workloads a cluster of Pi3s (or similar; more on that later) could be an advantage.
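To put a rough number on "a very large number of Zeros", here is the ratio of mean requests per second taken from the tables above. This assumes perfectly linear scaling and a balancer that never becomes the bottleneck, which the earlier results show is optimistic. The Pi3 figures also carry the overheating caveat from the top of this post.

```python
import math
from typing import Dict

# Mean requests/sec copied from the two tables above.
cached_homepage = {"zero": 443.57, "pi3": 1593.10}
uncached_no_nginx = {"zero": 15.18, "pi3": 422.27}


def zeros_to_match_pi3(rps: Dict[str, float]) -> int:
    """Zeros needed to equal one Pi3, assuming perfectly linear scaling
    and a load balancer that is never the limit."""
    return math.ceil(rps["pi3"] / rps["zero"])


cached_zeros = zeros_to_match_pi3(cached_homepage)      # 1593.10 / 443.57 is about 3.6
uncached_zeros = zeros_to_match_pi3(uncached_no_nginx)  # 422.27 / 15.18 is about 27.8
```

That works out to 4 Zeros for the cached homepage, but 28 for the uncached endpoint without Nginx.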

For easily cached HTTP workloads, the cluster brings no real benefit, since the load balancer Pi can push less traffic through HAProxy than that same Pi could serve directly. For more CPU-bound workloads, though, it could help. I'm going to keep working on this idea, including:

- Using Orange Pis (if I can get them to work, that is!)
- Using a Pine64 as the load balancer
- Putting Nginx on the other side of HAProxy
  - The theory being that for anything the cache is useful for, a single Pi can cope
  - As I mentioned before, HAProxy is a bit nicer to configure for updates
  - I suspect this won't help once TLS is added, though
- Combinations of these
- Adding TLS into the mix (since every site should these days!)